Overview

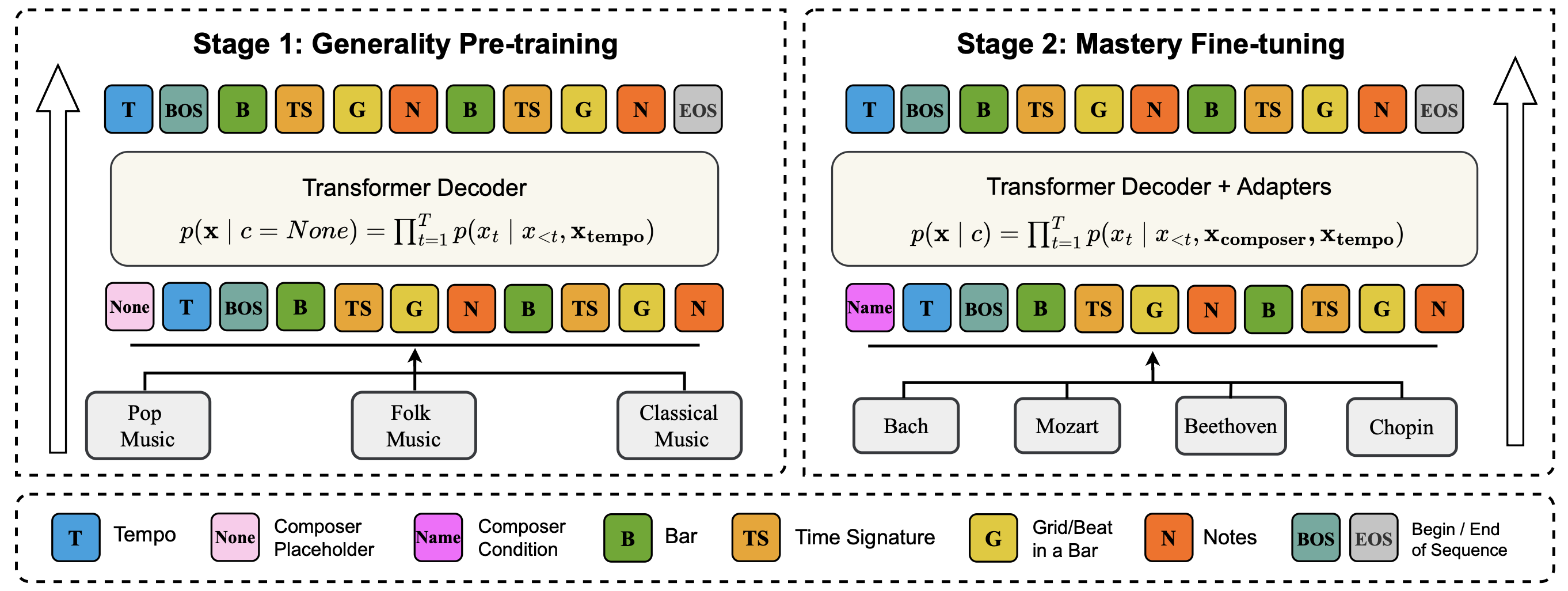

GnM (Generality → Mastery) is a two‑stage Transformer framework for symbolic music generation that first learns general musical knowledge from a large multi‑genre corpus and then specialises in the domain of four classical composers (Bach, Mozart, Beethoven, Chopin).

Key Contributions

- Two‑Stage Training Paradigm
  - Stage 1 – Generality: pre‑train on 1.3 M bars from pop, folk, and classical scores to learn broad melodic, harmonic, and rhythmic patterns.
  - Stage 2 – Mastery: fine‑tune with lightweight adapter modules on < 1k verified scores from the four target composers, conditioning generation on a composer token.
- Extended REMI Representation
  - New [TS] tokens cover 2/4, 3/4, 4/4, 3/8, and 6/8 (other metres are mapped by simple rules).
  - High‑resolution beat grids (up to 48 ticks per bar) preserve fine rhythmic nuance in every metre.
- Data Efficiency
  - With adapters included, GnM has 46M parameters, far fewer than comparable ABC‑notation models, yet it reaches or surpasses state‑of‑the‑art quality.

Method

Extended REMI

| Aspect | Original REMI | Extended REMI (ours) |
|---|---|---|
| Time signature | Fixed 4/4 | Five common metres + mapping rules |
| Beat resolution | 16 grids/bar | 18–48 grids/bar (metre‑dependent) |
| Global tokens | Tempo | Tempo + Composer |

Time‑Signature Events

Each bar opens with [TS:<meter>]. Irregular metres (e.g., 5/4) are decomposed (2/4 + 3/4) to retain bar integrity.
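The decomposition of irregular metres can be sketched as follows. The text only documents the 5/4 → 2/4 + 3/4 case, so the greedy rule used here (peel off the smallest supported bar until the remainder is itself supported) is an assumption made for illustration, and `decompose_metre` and `ts_tokens` are hypothetical names, not part of the GnM code.

```python
# Metres with a dedicated [TS] token, as listed in the text.
SUPPORTED_METRES = {(2, 4), (3, 4), (4, 4), (3, 8), (6, 8)}


def decompose_metre(numerator: int, denominator: int) -> list[tuple[int, int]]:
    """Split one bar into sub-bars whose metres are all supported.

    ASSUMPTION: the greedy rule is illustrative only; the source documents
    just the 5/4 -> 2/4 + 3/4 example.
    """
    sizes = sorted(n for n, d in SUPPORTED_METRES if d == denominator)
    if not sizes:
        raise ValueError(f"no supported metre with beat unit {denominator}")
    smallest = sizes[0]
    parts = []
    remaining = numerator
    # Peel off the smallest supported bar until the rest is supported as-is.
    while remaining not in sizes:
        if remaining <= smallest:
            raise ValueError(f"cannot decompose {numerator}/{denominator}")
        parts.append((smallest, denominator))
        remaining -= smallest
    parts.append((remaining, denominator))
    return parts


def ts_tokens(numerator: int, denominator: int) -> list[str]:
    """Render bar-opening tokens in the [TS:<metre>] form."""
    return [f"[TS:{n}/{d}]" for n, d in decompose_metre(numerator, denominator)]


print(ts_tokens(5, 4))  # ['[TS:2/4]', '[TS:3/4]']
print(ts_tokens(4, 4))  # ['[TS:4/4]']
```

Metres that this rule cannot express with supported sub-bars (for example 5/8) raise a `ValueError` instead of being guessed at.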

High‑Resolution Grids

Quarter‑note metres use 12 ticks per beat; eighth‑note metres use 6 ticks per beat. For example, 4/4 yields 4 × 12 = 48 grid positions per bar.
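As a quick check of the arithmetic, the grid size for each supported metre can be computed from the tick counts quoted above. This is a minimal sketch; `TICKS_PER_BEAT` and `grids_per_bar` are hypothetical names introduced here for illustration.

```python
# Ticks per beat, keyed by the beat unit (time-signature denominator):
# 12 for quarter-note metres, 6 for eighth-note metres, as stated in the text.
TICKS_PER_BEAT = {4: 12, 8: 6}

SUPPORTED_METRES = [(2, 4), (3, 4), (4, 4), (3, 8), (6, 8)]


def grids_per_bar(numerator: int, denominator: int) -> int:
    """Return the number of grid positions in one bar of the given metre."""
    if denominator not in TICKS_PER_BEAT:
        raise ValueError(f"unsupported beat unit: {denominator}")
    return numerator * TICKS_PER_BEAT[denominator]


GRID_TABLE = {f"{n}/{d}": grids_per_bar(n, d) for n, d in SUPPORTED_METRES}
print(GRID_TABLE)
# {'2/4': 24, '3/4': 36, '4/4': 48, '3/8': 18, '6/8': 36}
```

The smallest and largest values (18 for 3/8, 48 for 4/4) match the 18–48 range given for the extended representation.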

Two‑Stage Training

| Stage | Corpus / Pieces | Objective | Conditioning |
|---|---|---|---|
| Generality | 64.8 M tokens (pop + folk + classical) | Next‑token | [Tempo] |
| Mastery | 891 unique pieces (4 composers) | Next‑token | [ComposerName] + [Tempo] |

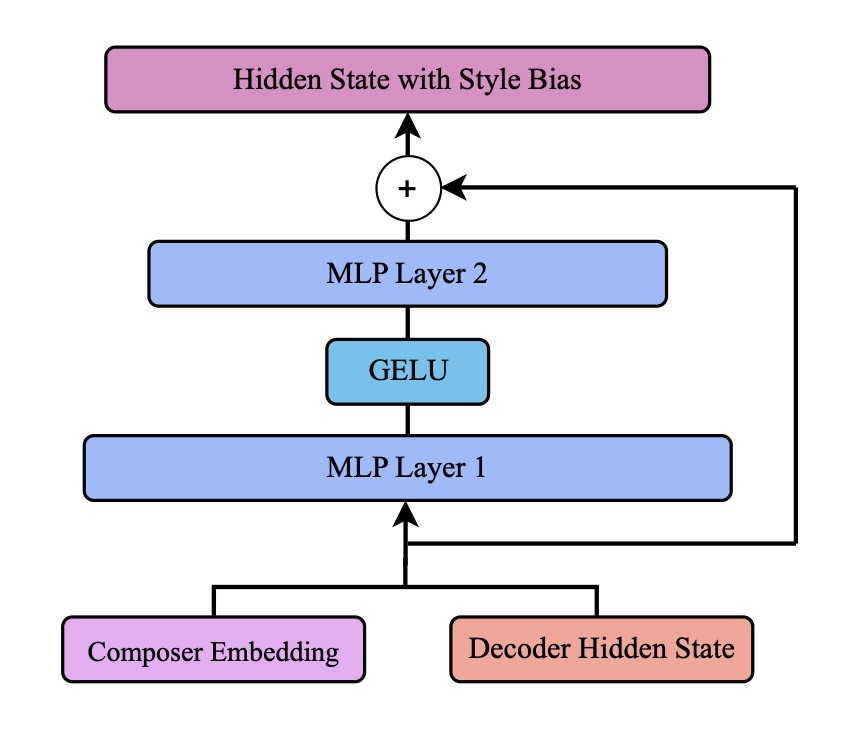

Style Adapter (inserted every other decoder layer)

The adapter concatenates the composer embedding with the hidden state, passes the result through a 2‑layer MLP (GELU), projects it back to the hidden size, and adds it to the input as a residual (Fig. 2).
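The adapter's data flow can be sketched without any framework for a single sequence position. This is not the GnM implementation: the layer widths, the placement of GELU between the two MLP layers, the zero‑initialised projection, and the names `init_adapter` and `style_adapter` are all assumptions made for illustration.

```python
import math
import random


def gelu(x: float) -> float:
    """Exact GELU: x * Phi(x), with Phi the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def linear(weight, bias, vec):
    """Dense layer y = W v + b on plain Python lists."""
    return [sum(w * v for w, v in zip(row, vec)) + b
            for row, b in zip(weight, bias)]


def init_adapter(d_model, d_composer, d_mid, seed=0):
    """Random MLP weights; the final projection starts at zero (assumption)."""
    rng = random.Random(seed)

    def rand(rows, cols):
        return [[rng.gauss(0.0, 0.02) for _ in range(cols)] for _ in range(rows)]

    return {
        "w1": rand(d_mid, d_model + d_composer), "b1": [0.0] * d_mid,
        "w2": rand(d_mid, d_mid), "b2": [0.0] * d_mid,
        "w_proj": [[0.0] * d_mid for _ in range(d_model)],
        "b_proj": [0.0] * d_model,
    }


def style_adapter(hidden, composer_emb, p):
    """Apply the adapter to the hidden state of one sequence position."""
    x = hidden + composer_emb                            # concatenate [h ; c]
    x = [gelu(v) for v in linear(p["w1"], p["b1"], x)]   # MLP layer 1 + GELU
    x = linear(p["w2"], p["b2"], x)                      # MLP layer 2
    delta = linear(p["w_proj"], p["b_proj"], x)          # project back to d_model
    return [h + d for h, d in zip(hidden, delta)]        # residual add


params = init_adapter(d_model=4, d_composer=2, d_mid=8)
hidden = [0.1, -0.2, 0.3, 0.4]
out = style_adapter(hidden, [1.0, 0.0], params)
print(out == hidden)  # True: the zero projection makes the adapter an identity
```

Starting the projection at zero is a common choice for adapters because the fine‑tuned model then begins from the pre‑trained behaviour; whether GnM initialises its adapters this way is not stated here.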

Results

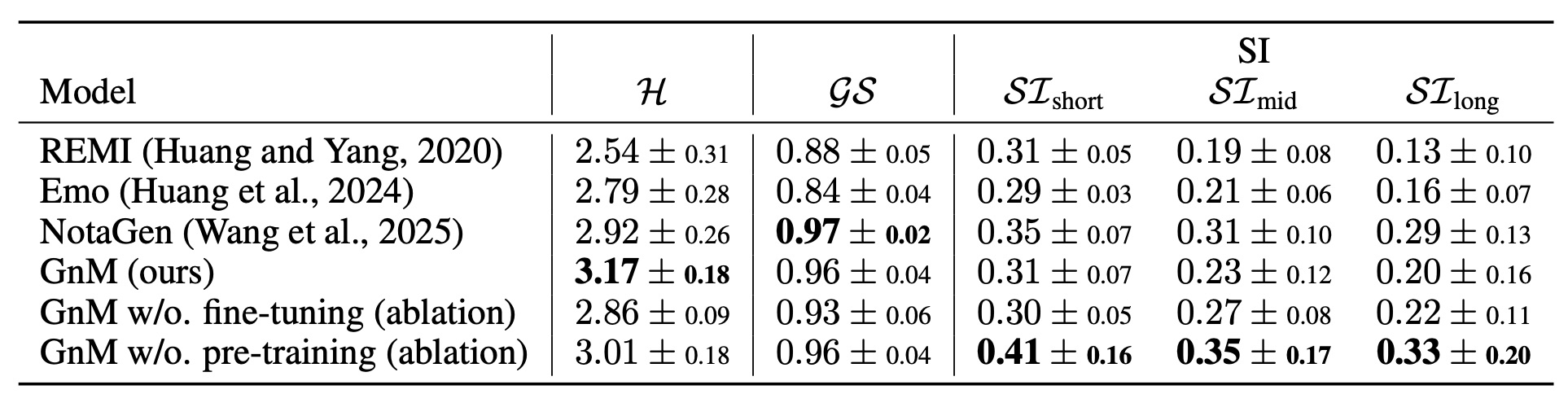

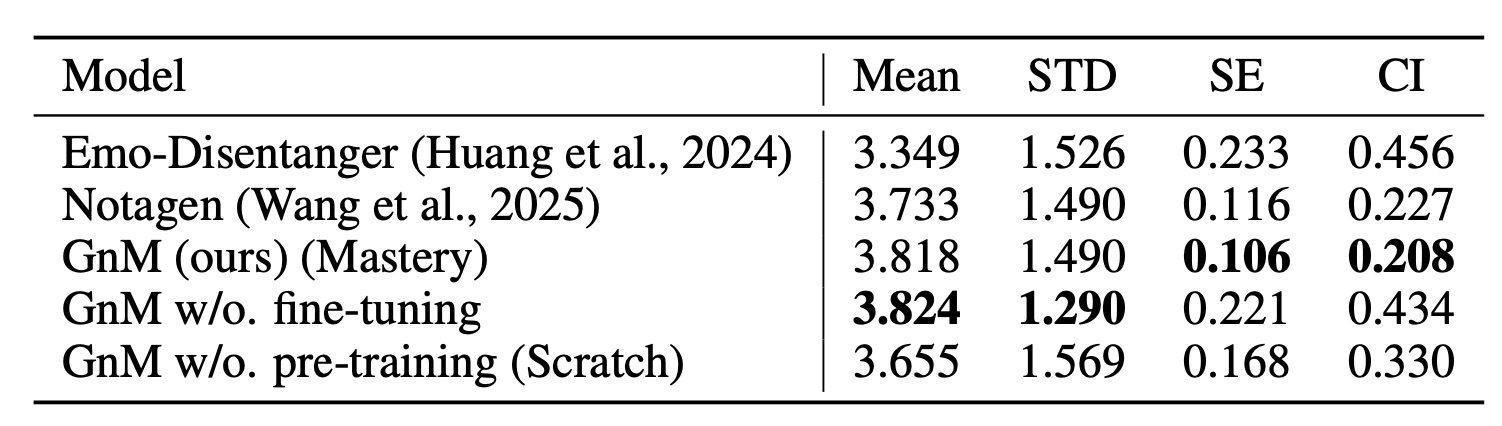

The table shows that:

- Musicality – GnM achieves the highest pitch‑class entropy and groove scores while maintaining competitive long‑term structure, comparable with the contemporaneous NotaGen-finetuned baseline.

- Ablations – Removing the pre‑training stage increases repetition in the generated music.

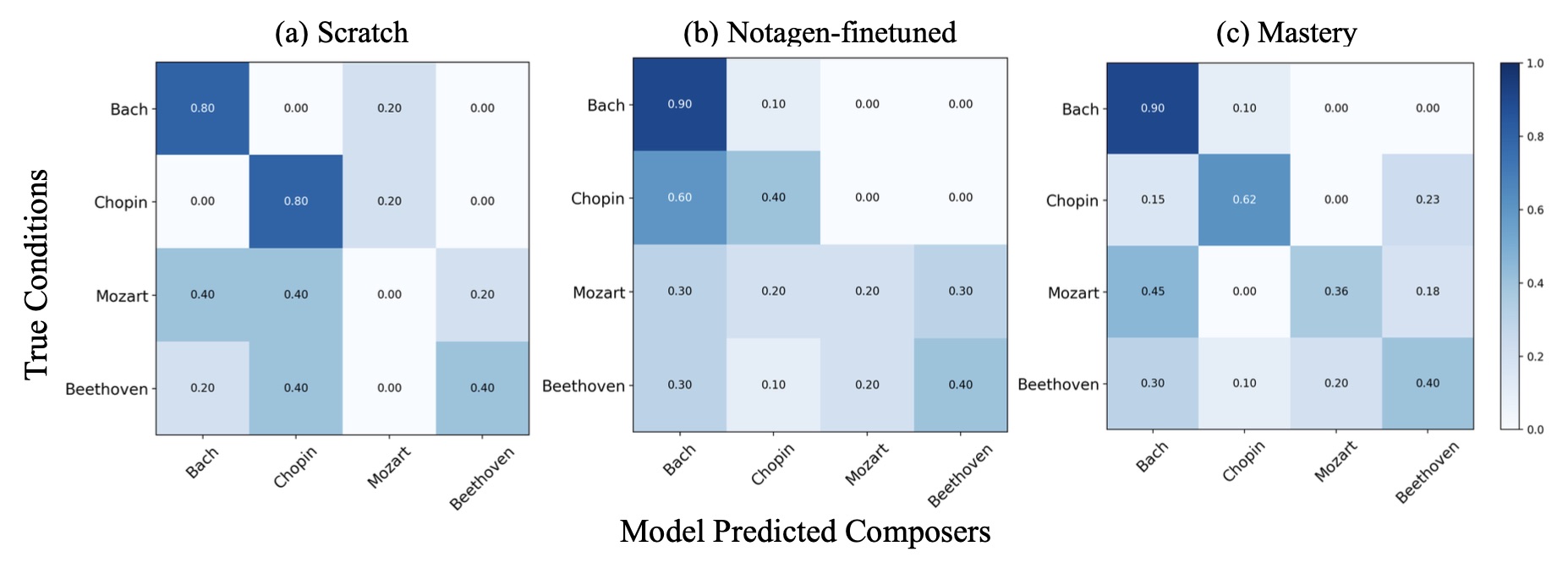

GnM (Mastery) exceeds NotaGen on Mozart and Beethoven and matches it on Bach and Chopin.

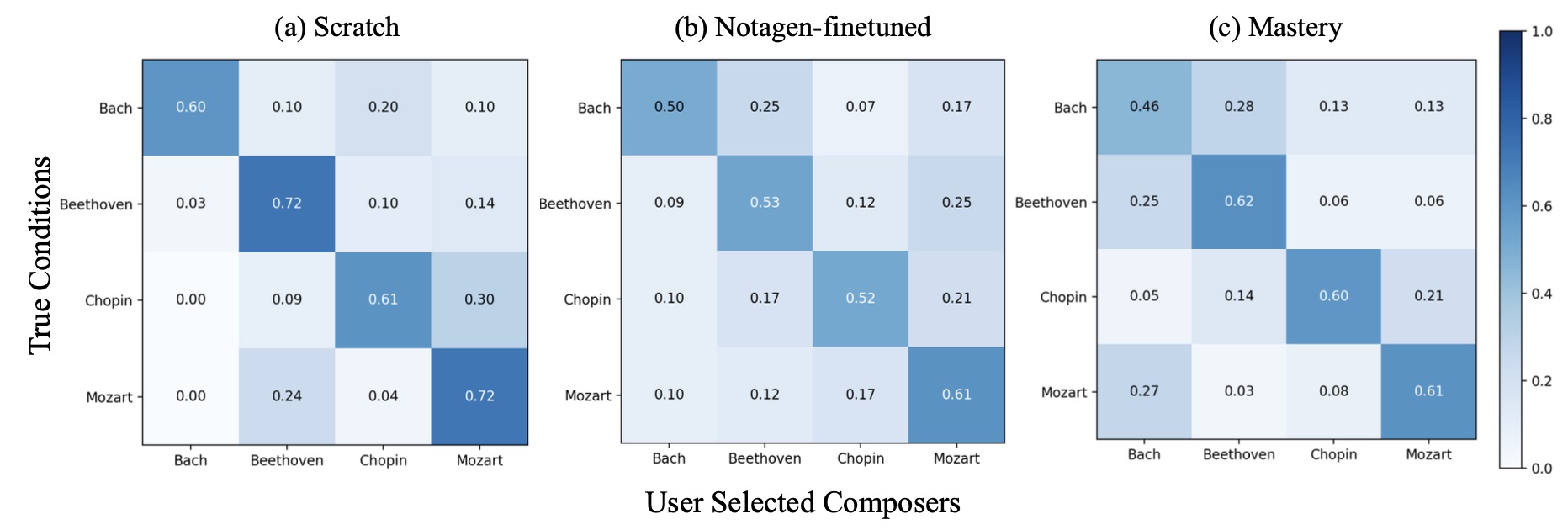

GnM (both the pre-training and Mastery models) achieves the highest perceived musicality; listeners also identify its stylistic features more reliably than those of NotaGen and of our ablated GnM (from scratch) model (Fig. 6).

Generation Samples

The samples below compare several models on generation quality and on how closely the output matches the conditioned composer style:

- GnM (Mastery): the two-stage generality-to-mastery model with the extended REMI representation.

- NotaGen-finetuned: a contemporaneous ABC-notation-based generation model, used as the baseline.

- GnM (from scratch): a one-stage ablation with the extended REMI representation, trained from scratch on the fine-tuning dataset only.

GnM (Mastery) produces music with high musicality and strong stylistic similarity to the conditioned composer.

GnM (Mastery) Model by Composer

| Bach | Beethoven | Chopin | Mozart |
|---|---|---|---|

NotaGen-finetuned Model by Composer

| Bach | Beethoven | Chopin | Mozart |
|---|---|---|---|

GnM Scratch Model (Ablation) by Composer

| Bach | Beethoven | Chopin | Mozart |
|---|---|---|---|

GnM (Pre-training Only), No Composer

This section presents music generated by GnM after pre-training only. The samples reflect the stylistic diversity learned from the multi-genre training data.